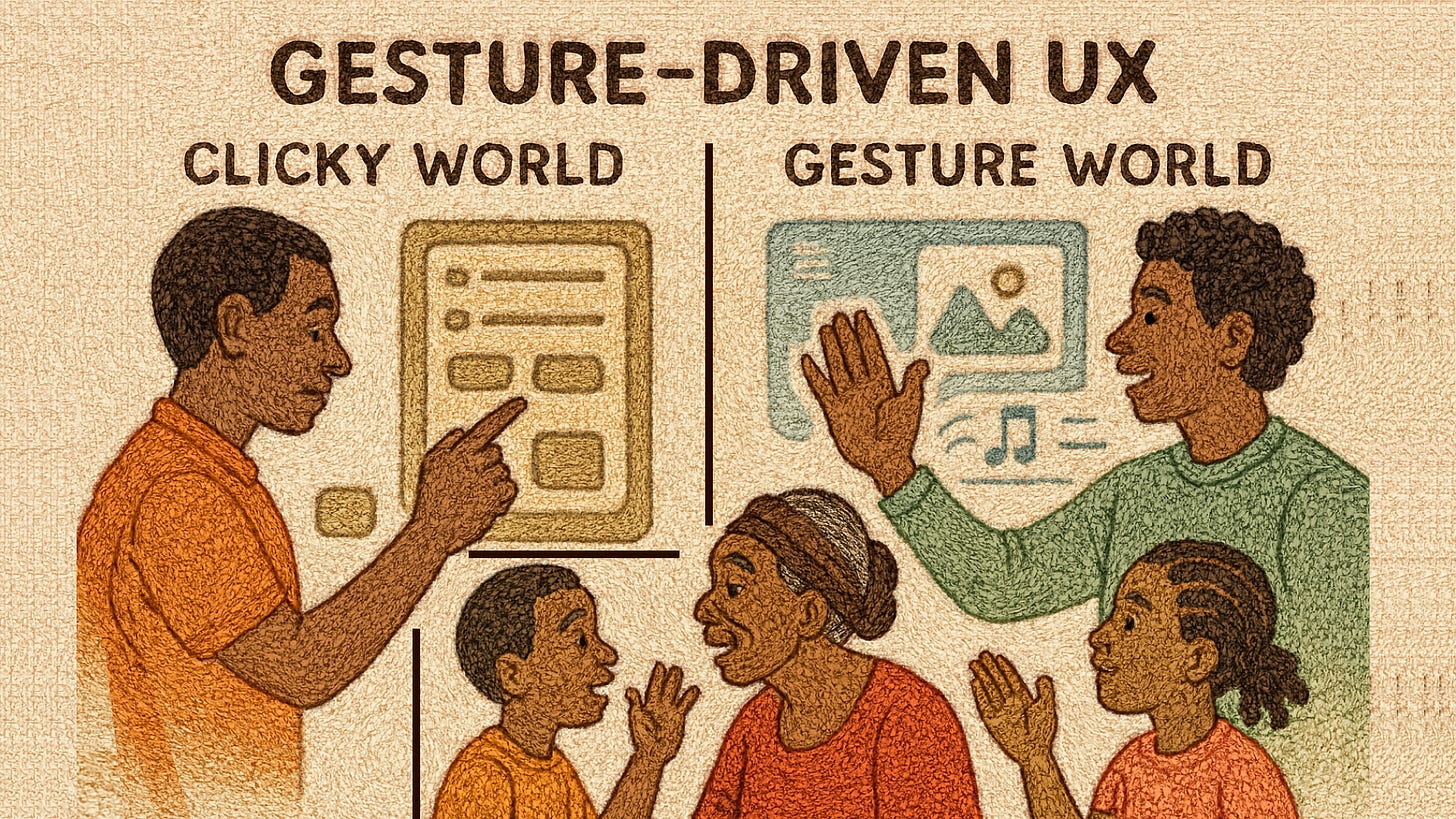

Gesture-Driven UX: The Future of Interaction Beyond Clicks

What Igbo storytelling can teach us about building more intuitive, gesture-driven user experiences.

I still recall sitting in my grandpa’s courtyard (Mbaraezi) back in Igboland, watching elders and other adults gesticulate with their whole bodies as they told stories. Hands flew, fingers pointed, heads nodded, arms rose. For us kids, it felt like magic that a wave, a swing of the hand, or a pointed finger could carry meaning louder than words.

Fast forward to today: those childhood moments shape how I think whenever I design. In UX, we rarely come close to full-body storytelling. We settle for clicks, taps, and scrolls. What if we built interfaces that honoured gestures the way my grandfather honoured storytelling: fully, and with meaning?

What is Gesture-Driven UX?

Gesture-Driven UX means interfaces where users interact not only (or even mostly) through clicks or taps, but through gestures such as swipes, pinches, air-swipes, and other touchless motions. It is growing especially quickly in AR/VR, smart-home controls, wearables, and touchless gesture systems.

It’s not brand new, but it’s reaching a tipping point because sensors, cameras, and AI are becoming accurate enough to decode human motion reliably.

Igbo Wisdom: Fewer Clicks, More Gestures

Back to those childhood days: in Igbo culture, we have sayings, songs, proverbs, and dance. A single raised hand or pointed finger can say “come here,” “this way,” or “look over there.” It’s intuitive. It’s learned. It’s universal among people who grew up with it. Children know these gestures even before they can speak in full sentences.

That intuitive understanding is what gesture-driven UX needs to borrow. We designers need to recognize that many gestures are already part of people’s cultural and physical habits. If we align UX gestures with what people already know, the interface feels more natural, has a gentler learning curve, and feels like home.

Case Study: Gojek’s Floating Gesture Navigation

Gojek, a ride-hailing super-app in Indonesia, made a change in 2020. Before the change, the app had a “More” menu icon that users tapped to see all services. That menu was often buried and easy to miss, and users told the team that finding services was tedious.

The team redesigned navigation around a floating, gesture-based tab at the bottom of the screen. The tab is always visible, and swiping it up brings up the full services menu. So instead of hunting for the “More” icon, you swipe up or tap the floating bar.
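To make the swipe-up idea concrete, here is a minimal sketch of how an app might decide whether a touch was a deliberate upward swipe rather than an accidental drag. This is not Gojek’s code: the `TouchPoint` type, the `is_swipe_up` function, and all three thresholds are hypothetical values that would need tuning with real users.

```python
from dataclasses import dataclass


@dataclass
class TouchPoint:
    x: float  # pixels from the left edge of the screen
    y: float  # pixels from the top edge (y grows downward)
    t: float  # timestamp in seconds


# Hypothetical thresholds; real values would come from user testing.
MIN_DISTANCE_PX = 80.0       # ignore short, accidental drags
MIN_VELOCITY_PX_S = 300.0    # ignore slow drags (hesitation or scrolling)
MAX_HORIZONTAL_RATIO = 0.5   # sideways movement may be at most half the upward movement


def is_swipe_up(start: TouchPoint, end: TouchPoint) -> bool:
    """Return True if the touch from start to end looks like a deliberate upward swipe."""
    dx = end.x - start.x
    dy = start.y - end.y  # positive when the finger moved up the screen
    dt = end.t - start.t
    if dt <= 0 or dy < MIN_DISTANCE_PX:
        return False  # too short, downward, or invalid timing
    if abs(dx) > MAX_HORIZONTAL_RATIO * dy:
        return False  # too diagonal to count as "up"
    return dy / dt >= MIN_VELOCITY_PX_S  # fast enough to be intentional


# A quick 200 px flick upward in a quarter of a second counts as a swipe.
print(is_swipe_up(TouchPoint(200, 800, 0.0), TouchPoint(210, 600, 0.25)))
```

Requiring distance, speed, and a mostly vertical path together is what keeps a casual scroll or a sideways brush from opening the menu; loosening any one of them trades fewer missed swipes for more accidental triggers.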

Some users took a while to figure out where to swipe, but once they did, their workflow became faster and more efficient. Gojek reduced friction in discovering services and made the experience more delightful for frequent users.

This is exactly gesture-driven UX in action: removing extra clicks, anticipating what users do most often, and letting them perform those frequent tasks with a gesture instead of navigating deep menus.

AI & Gesture Recognition: Growing Possibilities & Challenges

To make gestures work well, AI is key. Gesture recognition systems must handle:

Variability (height, skin tone, lighting)

Speed of motion

False positives (unintended gestures)

Privacy (cameras, tracking)
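False positives are often tackled by not trusting any single camera frame. The sketch below assumes a recognizer that already outputs a (label, confidence) pair for each frame, and it only confirms a gesture when the same label stays above a confidence threshold for several consecutive frames. After confirming, it ignores input briefly so one wave does not fire twice. The function name and default values are illustrative assumptions, not taken from any particular library.

```python
from typing import Iterable, List, Optional, Tuple


def confirm_gestures(
    frames: Iterable[Tuple[Optional[str], float]],
    min_confidence: float = 0.8,  # hypothetical per-frame confidence cutoff
    min_frames: int = 3,          # consecutive confident frames needed to confirm
    cooldown_frames: int = 10,    # frames ignored after a confirmed gesture
) -> List[Tuple[int, str]]:
    """Turn noisy per-frame predictions into confirmed gesture events.

    Each item in `frames` is (label, confidence); label is None when nothing is detected.
    Returns a list of (frame_index, label) for every confirmed gesture.
    """
    events: List[Tuple[int, str]] = []
    streak_label: Optional[str] = None
    streak_len = 0
    cooldown = 0
    for i, (label, conf) in enumerate(frames):
        if cooldown > 0:
            cooldown -= 1  # still settling after the last gesture; skip this frame
            continue
        if label is not None and conf >= min_confidence:
            if label == streak_label:
                streak_len += 1
            else:
                streak_label, streak_len = label, 1  # a new label starts a new streak
        else:
            streak_label, streak_len = None, 0  # a weak or empty frame breaks the streak
        if streak_label is not None and streak_len >= min_frames:
            events.append((i, streak_label))
            streak_label, streak_len = None, 0
            cooldown = cooldown_frames
    return events


# Four confident "wave" frames produce exactly one event, at the third frame.
print(confirm_gestures([("wave", 0.9), ("wave", 0.95), ("wave", 0.9), ("wave", 0.9)]))
```

Raising `min_frames` cuts accidental triggers but makes the system feel slower, which connects to the speed-of-motion challenge: a quick gesture may not last many frames.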

A recent academic example is the Accessible Gesture-Driven Augmented Reality Interaction System (Wang, 2025). It uses vision transformers, temporal convolutional networks, and graph attention networks to recognize hand and body gestures from wearable sensors or cameras, and it adapts interface layouts for users with motor impairments. The study reports roughly a 20% improvement in task-completion efficiency and a 25% increase in user satisfaction for motor-impaired users compared with baseline AR systems.

That study is promising because it doesn’t assume all users perform gestures the same way. It adapts. It respects variance. It builds interfaces that accommodate differences, just like my grandfather’s storytelling respected different voices in the courtyard.

Applying Gesture-Driven UX: What Designers Should Think About

Here are lessons I’ve collected, from childhood gestures to AI case studies:

Observe natural gestures in your users’ world: at home, in a courtyard like my grandpa’s, or in the local market. What gestures do people use? Which motions feel intuitive?

Provide feedback for gestures: When a user swipes in the air, something should respond: a visual cue, a sound, or a vibration, just as a parent nods when a child points. Without feedback, gestures feel like misses.

Design fallback paths: Not everyone can make large gestures. Some people have limited mobility. Some are working in bright sunlight. So offer alternatives: buttons, voice, and taps.

Make gestures discoverable: If your interface expects a swipe up, a pinch, or an air wave, guide the user first with a tip, an animation, or a practice mode, much like teaching someone that a wave means “hello,” not “swat the air.”

Respect privacy & avoid over-tracking: Gesture detection often relies on cameras or sensors. Be transparent. Don’t collect more data than you need. Where possible, process data on the device rather than sending everything to the cloud, where it could be misused.
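Two of these lessons, fallback paths and discoverability, can be checked mechanically during a design review. As a minimal sketch, assuming each interface action lists the inputs that can trigger it, the hypothetical `audit` function below flags gesture-driven actions that have no tap, button, or voice alternative, or no onboarding hint. The input names and the `Action` structure are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical input categories for this sketch.
GESTURE_INPUTS = {"swipe", "pinch", "air_wave"}
FALLBACK_INPUTS = {"tap", "button", "voice"}


@dataclass
class Action:
    name: str
    inputs: Set[str] = field(default_factory=set)  # every way the action can be triggered
    hint: str = ""  # onboarding tip shown the first time the gesture is expected


def audit(actions: List[Action]) -> Dict[str, List[str]]:
    """Map each problematic action's name to the list of issues found."""
    problems: Dict[str, List[str]] = {}
    for action in actions:
        issues = []
        uses_gesture = bool(action.inputs & GESTURE_INPUTS)
        if uses_gesture and not (action.inputs & FALLBACK_INPUTS):
            issues.append("no fallback")  # gesture is the only way in
        if uses_gesture and not action.hint:
            issues.append("gesture not discoverable")  # nothing teaches the gesture
        if issues:
            problems[action.name] = issues
    return problems


actions = [
    Action("open_services", {"swipe", "tap"}, "Swipe up to see all services"),
    Action("zoom_map", {"pinch"}),
]
print(audit(actions))
```

Here `zoom_map`, reachable only by a pinch and with no hint, is flagged on both counts, while the swipe-up menu that can also be tapped and ships with a tip passes.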

Gesture-Driven UX: The Future, But Grounded in Human Stories

Gestures are human. They come before language. They are built into us.

When design uses gestures well, it honours human habits. It connects with people. It reduces friction. It makes the digital feel more natural.

When AI supports gesture recognition that is inclusive and adaptive, we don’t just get cool new interactions; we get interfaces that feel like extensions of people’s own bodies, their voices, their culture.

Commitments You Can Make

From now on, whenever you design, tell yourself the following:

I will ask what gestures are natural in a user’s life.

I will prototype gesture-driven interactions early and test them with the real people who will use them.

I will ensure fallback (tap, voice) exists.

I will consider how gesture data is collected and who it includes or excludes.

Because the future of UX is not just buttons and scrolls. It’s movement. It’s expression. It’s the raised hand, the pointing finger, the wave, the pinch.

Author Spotlight

We’re so grateful to

for allowing us to share her story here on Code Like A Girl. You can find her original post linked below. If you enjoyed this piece, we encourage you to visit her publication and subscribe to support her work!


Another excellent piece! Congratulations, Blessing!