The Messy Reality of AI Products After Launch

Early traction isn’t about traffic. It’s about signals.

10,000 people saw my AI conversation partner for Cantonese on Reddit. 92 users tried it out. Everything stayed online.

I thought I’d nailed the launch.

Then I checked response times: 5+ seconds per message.

My product worked perfectly on my laptop, but with real users? Cracks started showing.

Here’s what broke, what I fixed, and what I’m still figuring out.

My Server Didn’t Crash - It Crawled

After publishing the Reddit post, I kept refreshing my monitoring dashboard, terrified something would crash.

Nothing crashed. But response times crawled from an average of 2 seconds to 5+.

The strangest part was that nobody complained about the slow response time. Either people were patient, or they assumed free AI tools are just slow.

I initially assumed I’d hit external API rate limits.

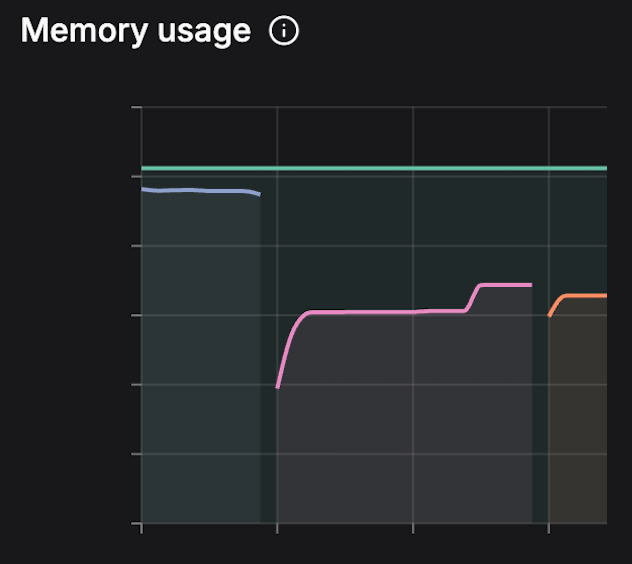

But I dug into my server logs and found the culprit: memory usage was above 85%. Each conversation was holding its state in memory.

The fixes I made:

Upgraded the server (instant relief)

Slimmed down variables in memory

Consolidated database calls (many queries → one query)
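To make the "many queries → one query" fix concrete, here is a minimal sketch. It assumes a hypothetical SQLite schema (`conversations` and `messages` tables) and made-up function names; the post doesn’t describe the real database or code, so treat this as an illustration of the pattern rather than the actual change.

```python
import sqlite3

# Hypothetical schema and sample data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE conversations (id INTEGER PRIMARY KEY, user_id INTEGER);
    CREATE TABLE messages (
        id INTEGER PRIMARY KEY,
        conversation_id INTEGER,
        body TEXT
    );
    INSERT INTO conversations (id, user_id) VALUES (1, 7), (2, 7), (3, 8);
    INSERT INTO messages (conversation_id, body) VALUES
        (1, 'nei5 hou2'), (1, 'zou2 san4'), (2, 'do1 ze6'), (3, 'baai1 baai3');
""")


def messages_many_queries(user_id):
    """Before: one query for the conversations, then one more per conversation."""
    query_count = 1
    conv_ids = [row[0] for row in conn.execute(
        "SELECT id FROM conversations WHERE user_id = ? ORDER BY id", (user_id,))]
    result = {}
    for conv_id in conv_ids:
        query_count += 1
        rows = conn.execute(
            "SELECT body FROM messages WHERE conversation_id = ? ORDER BY id",
            (conv_id,))
        result[conv_id] = [row[0] for row in rows]
    return result, query_count


def messages_one_query(user_id):
    """After: a single JOIN fetches the same rows in one round trip.

    Note: an inner join omits conversations that have no messages yet.
    """
    rows = conn.execute(
        """
        SELECT c.id, m.body
        FROM conversations AS c
        JOIN messages AS m ON m.conversation_id = c.id
        WHERE c.user_id = ?
        ORDER BY c.id, m.id
        """,
        (user_id,))
    result = {}
    for conv_id, body in rows:
        result.setdefault(conv_id, []).append(body)
    return result, 1


before, queries_before = messages_many_queries(7)
after, queries_after = messages_one_query(7)
print(before == after, queries_before, queries_after)
```

Both functions return the same data for this sample, but the first one issues a query per conversation, so its cost grows with every conversation a user has. The second stays at one query no matter how many there are.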

Response times dropped back under 2 seconds.

What surprised me: Traffic wasn’t massive. But 92 people actually using the product revealed inefficiencies I’d never hit testing alone.

Takeaway for builders: Set up basic monitoring on day one. Without data, you’ll guess wrong. Track memory, response times, and query counts.
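Basic monitoring doesn’t have to mean a full observability stack. Below is a rough sketch using only the Python standard library. The `RequestMetrics` class and the fake handler are hypothetical names made up for this example, and `tracemalloc` only sees Python-level allocations, not the whole server’s memory, so a real setup would likely pair this with host-level metrics.

```python
import time
import tracemalloc
from contextlib import contextmanager
from statistics import mean


class RequestMetrics:
    """Collects per-request latency, query counts, and peak traced memory."""

    def __init__(self):
        self.latencies = []      # seconds per request
        self.query_counts = []   # database queries per request
        self.peak_bytes = 0      # highest traced allocation seen so far

    @contextmanager
    def track(self):
        """Time one request; the handler bumps counter['queries'] per DB call."""
        counter = {"queries": 0}
        start = time.perf_counter()
        try:
            yield counter
        finally:
            self.latencies.append(time.perf_counter() - start)
            self.query_counts.append(counter["queries"])
            if tracemalloc.is_tracing():
                _, peak = tracemalloc.get_traced_memory()
                self.peak_bytes = max(self.peak_bytes, peak)

    def summary(self):
        return {
            "requests": len(self.latencies),
            "avg_latency_s": mean(self.latencies) if self.latencies else 0.0,
            "max_latency_s": max(self.latencies, default=0.0),
            "avg_queries": mean(self.query_counts) if self.query_counts else 0.0,
            "peak_memory_mb": self.peak_bytes / 1_000_000,
        }


tracemalloc.start()
metrics = RequestMetrics()

# Stand-in for a message handler; the real handler is not shown in the post.
with metrics.track() as counter:
    history = ["hello"] * 1000   # pretend conversation state
    counter["queries"] += 3      # pretend three database calls

print(metrics.summary())
```

Even something this small would surface the three numbers named above: how long each message takes, how many queries it triggers, and whether memory keeps climbing as conversations pile up.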

When 95% Accuracy Still Fails

A user commented on Reddit:

95% sounds great, right? That’s what I thought, until I saw users abandoning conversations mid-message. I checked the logs for transcriptions, and some of them were gibberish.

Here’s the mismatch:

Speech recognition models are typically trained on fluent native speakers with clear pronunciation and intonation.

My users are heritage speakers. They learned Cantonese from dinner table conversations, not textbooks. They have gaps in vocabulary, mixed tones, and uncertain pronunciation.

They’re using my product because their Cantonese isn’t perfect, but the model expects perfection.

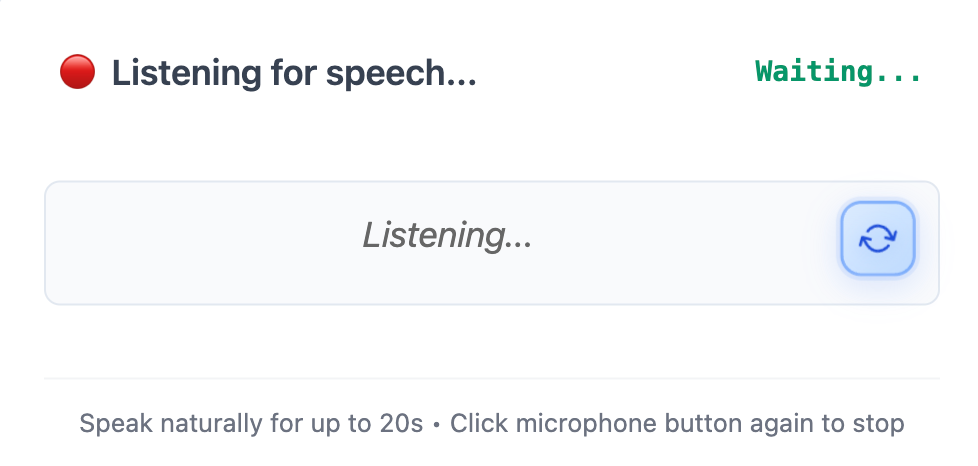

Quick fix: I added an audio retry button, so users can re-record.

Fine-tuning a model might improve accuracy from 95% to 98%, but that’s weeks of work. The retry button was a 3-hour patch that made failures less frustrating. Right now, speed matters more than perfection when I’m still validating the core problem.

Takeaway for builders: When you hit AI model limitations, ask yourself: can I solve this with product design instead of model improvements?

The Feature Request I Never Saw Coming

A user messaged me: “Can you add a male voice? The female pitch makes the tones harder to hear.”

I hadn’t thought of that.

Cantonese has six to nine tones, depending on how you count them. Pitch differences are subtle but crucial. For example, use the wrong tone and “expensive” becomes “ghost.”

For learners, a lower-pitched male voice makes it easier to distinguish between tones.

So, I added male and female voice options within 24 hours.

Takeaway for builders: I would never have thought about voice pitch affecting tonal language learning. The features that matter most come from listening to what makes the experience difficult for your actual users, not from building what sounds impressive.

Early Signals

Here’s what actually happened two weeks after the Reddit launch:

92 users

232 conversations

1,064 total interactions

These numbers are tiny but telling. Not everyone stuck around, and that’s normal at this stage.
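For a rough sense of what those totals mean per person, the arithmetic can be sketched as below. The inputs are the figures from this post; the variable names are just for illustration, and averages like these hide how unevenly usage is spread across users.

```python
# Figures reported in this post.
post_views = 10_000
users = 92
conversations = 232
interactions = 1_064

conversations_per_user = conversations / users
interactions_per_conversation = interactions / conversations
interactions_per_user = interactions / users
view_to_user_rate = users / post_views

print(f"Conversations per user:        {conversations_per_user:.1f}")
print(f"Interactions per conversation: {interactions_per_conversation:.1f}")
print(f"Interactions per user:         {interactions_per_user:.1f}")
print(f"Views that became users:       {view_to_user_rate:.1%}")
```

That works out to roughly 2.5 conversations per user and about 4.6 interactions per conversation, with just under 1% of the people who saw the post trying the product.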

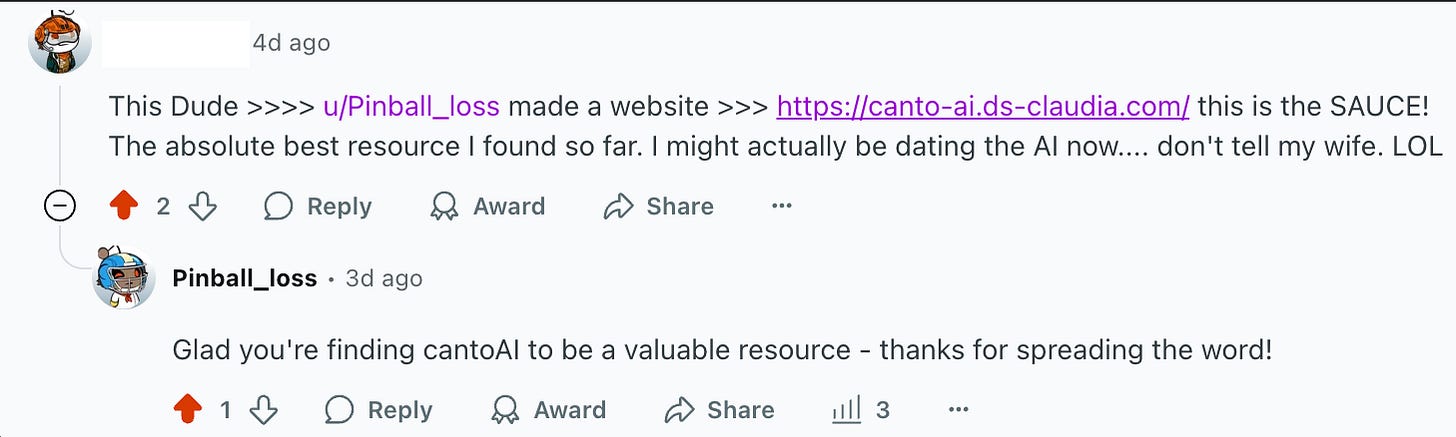

Yet one user cared enough to promote it unprompted in a different Reddit thread:

I didn’t ask them to, nor did I offer anything. That’s the signal I’m betting on!

What Happens Next

A few weeks ago, my biggest fear was posting on Reddit and hearing crickets.

Now my fear is different: spending three months building features nobody actually needs.

I can solve the technical challenges - memory issues, speech recognition failures, WebSocket complexity. I’ve debugged production ML systems serving millions. But I can’t code my way to the answer that matters most: will anyone actually use this habitually?

Part of me hopes I’m right. Part of me is already planning what to build next if I’m wrong.

If you’re building something: What challenges have you faced after launch?

Author Spotlight

We’re so grateful to the author for allowing us to share her story here on Code Like A Girl. You can find her original post linked below. If you enjoyed this piece, we encourage you to visit her publication and subscribe to support her work!
